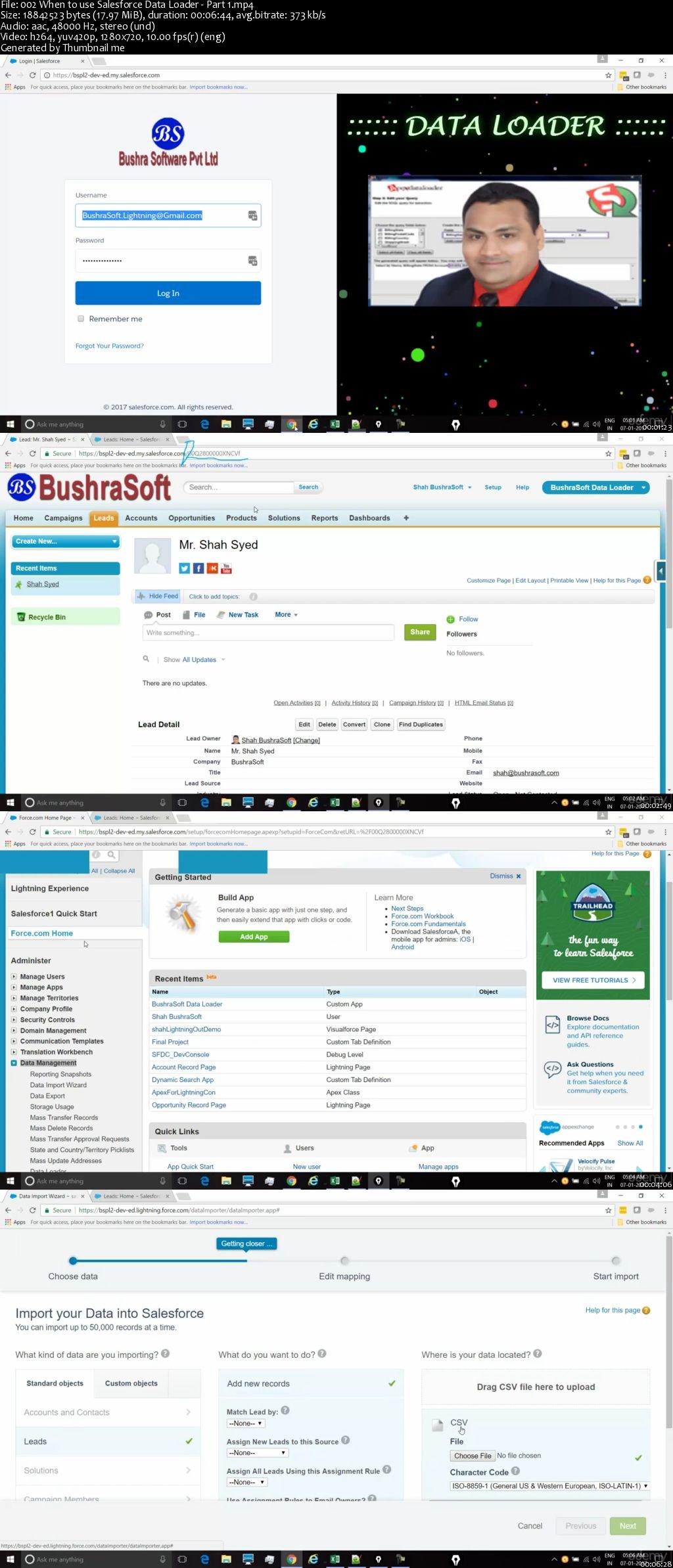

This page describes how to use Data Loader, a client application for the bulk import or export of data. Use it to insert, update, delete, or export Salesforce or Database.com records. With the Summer '15 release, Data Loader, an easy-to-use graphical tool that helps you import, export, update, and delete Salesforce data, became available for Mac OS.

The latest version of Jitterbit Data Loader for Salesforce listed on Mac Informer is 5.0. The app is developed by Jitterbit and is listed under Automation in the System Tools category.

To install Data Loader:

- From Setup, download the Data Loader installation file.

- Right-click the .zip file and select Extract All.

- In the Data Loader folder, double-click the installer.command file.

- If you can't open the file because of an unidentified developer message, press the Control key while clicking the installer.command file, and select Open from the menu.

I won't get into how to use Data Loader here, but there is one thing to know for this procedure: in "Step 2" of Data Loader, make sure you select Show All Objects to expose the "Attachments" object.

This article outlines how to use Copy Activity in Azure Data Factory to copy data from and to Salesforce. It builds on the Copy Activity overview article that presents a general overview of the copy activity.

Supported capabilities

This Salesforce connector is supported for the following activities:

- Copy activity with supported source/sink matrix

You can copy data from Salesforce to any supported sink data store. You also can copy data from any supported source data store to Salesforce. For a list of data stores that are supported as sources or sinks by the Copy activity, see the Supported data stores table.

Specifically, this Salesforce connector supports:

- Salesforce Developer, Professional, Enterprise, or Unlimited editions.

- Copying data from and to Salesforce production, sandbox, and custom domain.

The Salesforce connector is built on top of the Salesforce REST/Bulk API. By default, the connector uses v45 to copy data from Salesforce and v40 to copy data to Salesforce. You can also explicitly set the API version used to read or write data via the apiVersion property in the linked service.

Prerequisites

API permission must be enabled in Salesforce. For more information, see Enable API access in Salesforce by permission set.

Salesforce request limits

Salesforce has limits for both total API requests and concurrent API requests. Note the following points:

- If the number of concurrent requests exceeds the limit, throttling occurs and you see random failures.

- If the total number of requests exceeds the limit, the Salesforce account is blocked for 24 hours.

You might also receive the 'REQUEST_LIMIT_EXCEEDED' error message in both scenarios. For more information, see the 'API request limits' section in Salesforce developer limits.

Get started

To perform the Copy activity with a pipeline, you can use any of the tools or SDKs that Data Factory supports.

The following sections provide details about properties that are used to define Data Factory entities specific to the Salesforce connector.

Linked service properties

The following properties are supported for the Salesforce linked service.

| Property | Description | Required |
|---|---|---|
| type | The type property must be set to Salesforce. | Yes |
| environmentUrl | Specify the URL of the Salesforce instance. - Default is 'https://login.salesforce.com'. - To copy data from sandbox, specify 'https://test.salesforce.com'. - To copy data from custom domain, specify, for example, 'https://[domain].my.salesforce.com'. | No |
| username | Specify a user name for the user account. | Yes |
| password | Specify a password for the user account. Mark this field as a SecureString to store it securely in Data Factory, or reference a secret stored in Azure Key Vault. | Yes |
| securityToken | Specify a security token for the user account. To learn about security tokens in general, see Security and the API. The security token can be skipped only if you add the Integration Runtime's IP to the trusted IP address list on Salesforce. When using Azure IR, refer to Azure Integration Runtime IP addresses. For instructions on how to get and reset a security token, see Get a security token. Mark this field as a SecureString to store it securely in Data Factory, or reference a secret stored in Azure Key Vault. | No |
| apiVersion | Specify the Salesforce REST/Bulk API version to use, e.g. 48.0. By default, the connector uses v45 to copy data from Salesforce, and uses v40 to copy data to Salesforce. | No |
| connectVia | The integration runtime to be used to connect to the data store. If not specified, it uses the default Azure Integration Runtime. | No for source, Yes for sink if the source linked service doesn't have integration runtime |

Important

When you copy data into Salesforce, the default Azure Integration Runtime can't be used to execute copy. In other words, if your source linked service doesn't have a specified integration runtime, explicitly create an Azure Integration Runtime with a location near your Salesforce instance. Associate the Salesforce linked service as in the following example.

Example: Store credentials in Data Factory
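
The example body is missing here, so the following is only a minimal sketch of what such a linked service definition could look like. It uses Python purely to assemble and print the JSON. The property names come from the table above; the surrounding "name"/"properties"/"typeProperties" layout and the "IntegrationRuntimeReference" type follow the usual Data Factory JSON shape and should be treated as assumptions, and every value in angle brackets is a placeholder.

```python
import json


def salesforce_linked_service(name, username, password, security_token,
                              environment_url="https://login.salesforce.com",
                              api_version=None, integration_runtime=None):
    """Return a Salesforce linked service definition as a plain dict."""
    type_properties = {
        "environmentUrl": environment_url,
        "username": username,
        # SecureString marks the value to be stored securely in Data Factory.
        "password": {"type": "SecureString", "value": password},
        "securityToken": {"type": "SecureString", "value": security_token},
    }
    if api_version is not None:
        type_properties["apiVersion"] = api_version

    properties = {"type": "Salesforce", "typeProperties": type_properties}
    if integration_runtime is not None:
        # Assumed reference shape; required when Salesforce is the sink and
        # the source linked service has no integration runtime of its own.
        properties["connectVia"] = {
            "referenceName": integration_runtime,
            "type": "IntegrationRuntimeReference",
        }
    return {"name": name, "properties": properties}


linked_service = salesforce_linked_service(
    name="SalesforceLinkedService",  # hypothetical name
    username="<username>",
    password="<password>",
    security_token="<security token>",
    api_version="48.0",
    integration_runtime="<name of integration runtime>",
)
print(json.dumps(linked_service, indent=4))
```

Leaving out `integration_runtime` omits connectVia entirely, which matches the "No for source" case in the table.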

Example: Store credentials in Key Vault
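
The example body is missing here as well. As a hedged sketch, the password and security token can reference Key Vault secrets instead of inline values. The "AzureKeyVaultSecret" reference shape below is the commonly used Data Factory form and is an assumption here, as are the helper name `key_vault_secret` and all placeholder names in angle brackets.

```python
import json


def key_vault_secret(store_name, secret_name):
    """Return a reference to a secret held in an Azure Key Vault linked service."""
    return {
        "type": "AzureKeyVaultSecret",
        "secretName": secret_name,
        "store": {
            "referenceName": store_name,
            "type": "LinkedServiceReference",
        },
    }


vault = "<Azure Key Vault linked service name>"  # placeholder

linked_service = {
    "name": "SalesforceLinkedService",  # hypothetical name
    "properties": {
        "type": "Salesforce",
        "typeProperties": {
            "username": "<username>",
            # Both secrets are resolved from Key Vault at run time.
            "password": key_vault_secret(vault, "<password secret name>"),
            "securityToken": key_vault_secret(vault, "<security token secret name>"),
        },
        "connectVia": {
            "referenceName": "<name of integration runtime>",
            "type": "IntegrationRuntimeReference",
        },
    },
}
print(json.dumps(linked_service, indent=4))
```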

Dataset properties

For a full list of sections and properties available for defining datasets, see the Datasets article. This section provides a list of properties supported by the Salesforce dataset.

To copy data from and to Salesforce, set the type property of the dataset to SalesforceObject. The following properties are supported.

| Property | Description | Required |
|---|---|---|
| type | The type property must be set to SalesforceObject. | Yes |
| objectApiName | The Salesforce object name to retrieve data from. | No for source, Yes for sink |

Important

The '__c' part of API Name is needed for any custom object.

Example:
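
The dataset example itself is missing, so this is a minimal sketch under stated assumptions. It builds a SalesforceObject dataset as a Python dict and prints it as JSON. The object name `MyTable__c`, the dataset name, and the linked service name are hypothetical; the "linkedServiceName" reference and empty "schema" follow the usual Data Factory dataset layout rather than anything stated above.

```python
import json


def salesforce_dataset(name, linked_service_name, object_api_name=None):
    """Return a SalesforceObject dataset definition as a plain dict."""
    type_properties = {}
    if object_api_name is not None:
        # Custom objects need the '__c' suffix in their API name.
        type_properties["objectApiName"] = object_api_name
    return {
        "name": name,
        "properties": {
            "type": "SalesforceObject",
            "typeProperties": type_properties,
            "schema": [],
            "linkedServiceName": {
                "referenceName": linked_service_name,
                "type": "LinkedServiceReference",
            },
        },
    }


dataset = salesforce_dataset(
    name="SalesforceDataset",  # hypothetical name
    linked_service_name="<Salesforce linked service name>",
    object_api_name="MyTable__c",  # hypothetical custom object
)
print(json.dumps(dataset, indent=4))
```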

Note

For backward compatibility: When you copy data from Salesforce, if you use the previous 'RelationalTable' type dataset, it keeps working while you see a suggestion to switch to the new 'SalesforceObject' type.

| Property | Description | Required |
|---|---|---|
| type | The type property of the dataset must be set to RelationalTable. | Yes |
| tableName | Name of the table in Salesforce. | No (if 'query' in the activity source is specified) |

Copy activity properties

For a full list of sections and properties available for defining activities, see the Pipelines article. This section provides a list of properties supported by Salesforce source and sink.

Salesforce as a source type

To copy data from Salesforce, set the source type in the copy activity to SalesforceSource. The following properties are supported in the copy activity source section.

| Property | Description | Required |
|---|---|---|
| type | The type property of the copy activity source must be set to SalesforceSource. | Yes |
| query | Use the custom query to read data. You can use Salesforce Object Query Language (SOQL) query or SQL-92 query. See more tips in query tips section. If query is not specified, all the data of the Salesforce object specified in 'objectApiName' in dataset will be retrieved. | No (if 'objectApiName' in the dataset is specified) |
| readBehavior | Indicates whether to query the existing records, or query all records including the deleted ones. If not specified, the default behavior is the former. Allowed values: query (default), queryAll. | No |

Important

The '__c' part of API Name is needed for any custom object.

Example:
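
The source example is missing, so here is a hedged sketch of a copy activity that reads from Salesforce. It assembles the definition in Python and prints it as JSON. The source properties come from the table above; the activity layout ("inputs", "outputs", "typeProperties") follows the usual Data Factory pipeline shape and is an assumption, and the query, field names, and dataset names are hypothetical.

```python
import json


def salesforce_source(query=None, read_behavior="query"):
    """Return the source section of a copy activity that reads from Salesforce."""
    if read_behavior not in ("query", "queryAll"):
        raise ValueError("readBehavior must be 'query' or 'queryAll'")
    source = {"type": "SalesforceSource", "readBehavior": read_behavior}
    if query is not None:
        # Without a query, the object named in the dataset is read in full.
        source["query"] = query
    return source


copy_activity = {
    "name": "CopyFromSalesforce",  # hypothetical name
    "type": "Copy",
    "inputs": [{"referenceName": "<Salesforce input dataset name>",
                "type": "DatasetReference"}],
    "outputs": [{"referenceName": "<output dataset name>",
                 "type": "DatasetReference"}],
    "typeProperties": {
        "source": salesforce_source(
            "SELECT Col_Currency__c, Col_Date__c FROM AllDataType__c"
        ),
        "sink": {"type": "<sink type>"},
    },
}
print(json.dumps(copy_activity, indent=4))
```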

Note

For backward compatibility: When you copy data from Salesforce, if you use the previous 'RelationalSource' type copy, the source keeps working while you see a suggestion to switch to the new 'SalesforceSource' type.

Salesforce as a sink type

To copy data to Salesforce, set the sink type in the copy activity to SalesforceSink. The following properties are supported in the copy activity sink section.

| Property | Description | Required |
|---|---|---|
| type | The type property of the copy activity sink must be set to SalesforceSink. | Yes |
| writeBehavior | The write behavior for the operation. Allowed values are Insert and Upsert. | No (default is Insert) |
| externalIdFieldName | The name of the external ID field for the upsert operation. The specified field must be defined as 'External ID Field' in the Salesforce object. It can't have NULL values in the corresponding input data. | Yes for 'Upsert' |
| writeBatchSize | The row count of data written to Salesforce in each batch. | No (default is 5,000) |
| ignoreNullValues | Indicates whether to ignore NULL values from input data during a write operation. Allowed values are true and false. - True: Leave the data in the destination object unchanged when you do an upsert or update operation. Insert a defined default value when you do an insert operation. - False: Update the data in the destination object to NULL when you do an upsert or update operation. Insert a NULL value when you do an insert operation. | No (default is false) |

Example: Salesforce sink in a copy activity
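
The sink example is missing, so this is a minimal sketch rather than the original sample. It builds the sink section in Python, applying the defaults and the "Yes for 'Upsert'" rule from the table above. The helper name `salesforce_sink` and the external ID field `External_Id__c` are hypothetical.

```python
import json


def salesforce_sink(write_behavior="Insert", external_id_field_name=None,
                    write_batch_size=5000, ignore_null_values=False):
    """Return the sink section of a copy activity that writes to Salesforce."""
    if write_behavior not in ("Insert", "Upsert"):
        raise ValueError("writeBehavior must be 'Insert' or 'Upsert'")
    if write_behavior == "Upsert" and not external_id_field_name:
        # Upsert matches rows on an external ID field, so it must be named.
        raise ValueError("externalIdFieldName is required for Upsert")
    sink = {
        "type": "SalesforceSink",
        "writeBehavior": write_behavior,
        "writeBatchSize": write_batch_size,
        "ignoreNullValues": ignore_null_values,
    }
    if external_id_field_name:
        sink["externalIdFieldName"] = external_id_field_name
    return sink


sink = salesforce_sink(
    write_behavior="Upsert",
    external_id_field_name="External_Id__c",  # hypothetical field
    ignore_null_values=True,
)
print(json.dumps(sink, indent=4))
```

Checking the Upsert requirement while building the definition surfaces the mistake before the pipeline is deployed, not at run time.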

Query tips

Retrieve data from a Salesforce report

You can retrieve data from Salesforce reports by specifying a query as {call "<report name>"}. An example is "query": "{call \"TestReport\"}".

Retrieve deleted records from the Salesforce Recycle Bin

To query the soft deleted records from the Salesforce Recycle Bin, you can specify readBehavior as queryAll.

Difference between SOQL and SQL query syntax

When copying data from Salesforce, you can use either a SOQL query or a SQL query. Note that the two have different syntax and functionality support, so do not mix them. We suggest the SOQL query, which is natively supported by Salesforce. The following table lists the main differences:

| Syntax | SOQL Mode | SQL Mode |
|---|---|---|
| Column selection | You need to enumerate the fields to be copied in the query, e.g. SELECT field1, field2 FROM objectname | SELECT * is supported in addition to column selection. |
| Quotation marks | Field/object names cannot be quoted. | Field/object names can be quoted, e.g. SELECT "id" FROM "Account" |
| Datetime format | See the samples in the next section. | See the samples in the next section. |
| Boolean values | Represented as False and True, e.g. SELECT … WHERE IsDeleted=True. | Represented as 0 or 1, e.g. SELECT … WHERE IsDeleted=1. |
| Column renaming | Not supported. | Supported, e.g.: SELECT a AS b FROM …. |
| Relationship | Supported, e.g. Account_vod__r.nvs_Country__c. | Not supported. |

Retrieve data by using a where clause on the DateTime column

When you specify the SOQL or SQL query, pay attention to the DateTime format difference. For example:

- SOQL sample:

SELECT Id, Name, BillingCity FROM Account WHERE LastModifiedDate >= @{formatDateTime(pipeline().parameters.StartTime,'yyyy-MM-ddTHH:mm:ssZ')} AND LastModifiedDate < @{formatDateTime(pipeline().parameters.EndTime,'yyyy-MM-ddTHH:mm:ssZ')}

- SQL sample:

SELECT * FROM Account WHERE LastModifiedDate >= {ts'@{formatDateTime(pipeline().parameters.StartTime,'yyyy-MM-dd HH:mm:ss')}'} AND LastModifiedDate < {ts'@{formatDateTime(pipeline().parameters.EndTime,'yyyy-MM-dd HH:mm:ss')}'}
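
To make the format difference concrete, the sketch below renders the same time window both ways in plain Python, outside Data Factory. It shows what the two expressions above expand to for given start and end times. The helper names are hypothetical, and the inputs are assumed to be timezone-aware datetimes that get normalized to UTC.

```python
from datetime import datetime, timezone


def soql_datetime(dt):
    """Format a datetime as an unquoted SOQL literal, e.g. 2024-01-01T00:00:00Z."""
    return dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


def sql_timestamp(dt):
    """Format a datetime as a SQL-92 timestamp escape, e.g. {ts'2024-01-01 00:00:00'}."""
    return "{ts'" + dt.astimezone(timezone.utc).strftime("%Y-%m-%d %H:%M:%S") + "'}"


start = datetime(2024, 1, 1, tzinfo=timezone.utc)
end = datetime(2024, 1, 2, tzinfo=timezone.utc)

soql_query = (
    "SELECT Id, Name, BillingCity FROM Account "
    f"WHERE LastModifiedDate >= {soql_datetime(start)} "
    f"AND LastModifiedDate < {soql_datetime(end)}"
)
sql_query = (
    "SELECT * FROM Account "
    f"WHERE LastModifiedDate >= {sql_timestamp(start)} "
    f"AND LastModifiedDate < {sql_timestamp(end)}"
)

print(soql_query)
print(sql_query)
```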

Error of MALFORMED_QUERY: Truncated

If you hit the 'MALFORMED_QUERY: Truncated' error, it is normally because the data contains a JunctionIdList type column, and Salesforce has limitations in supporting such data with a large number of rows. To mitigate, try excluding the JunctionIdList column or limiting the number of rows to copy (you can partition the copy into multiple copy activity runs).
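
One possible way to partition is by time window, with each copy activity run receiving one slice as its start and end time. The sketch below splits a range into equal, non-overlapping slices. Partitioning on LastModifiedDate is an assumption made for illustration, not something the connector prescribes, and `split_window` is a hypothetical helper.

```python
from datetime import datetime, timezone


def split_window(start, end, parts):
    """Split [start, end) into `parts` equal, contiguous half-open windows."""
    if parts < 1:
        raise ValueError("parts must be at least 1")
    if end <= start:
        raise ValueError("end must be later than start")
    step = (end - start) / parts
    # Use the exact end value for the last bound to avoid rounding drift.
    bounds = [start + step * i for i in range(parts)] + [end]
    return list(zip(bounds[:-1], bounds[1:]))


windows = split_window(
    datetime(2024, 1, 1, tzinfo=timezone.utc),
    datetime(2024, 1, 5, tzinfo=timezone.utc),
    parts=4,
)
for window_start, window_end in windows:
    # Each pair would feed one copy activity run's time parameters.
    print(window_start.isoformat(), "->", window_end.isoformat())
```

Because each window is half-open, a record modified exactly on a boundary falls into only one run when the query uses >= for the start and < for the end.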

Data type mapping for Salesforce

When you copy data from Salesforce, the following mappings are used from Salesforce data types to Data Factory interim data types. To learn about how the copy activity maps the source schema and data type to the sink, see Schema and data type mappings.

| Salesforce data type | Data Factory interim data type |
|---|---|
| Auto Number | String |
| Checkbox | Boolean |
| Currency | Decimal |
| Date | DateTime |
| Date/Time | DateTime |
| Email | String |
| ID | String |
| Lookup Relationship | String |
| Multi-Select Picklist | String |
| Number | Decimal |
| Percent | Decimal |
| Phone | String |
| Picklist | String |
| Text | String |
| Text Area | String |
| Text Area (Long) | String |
| Text Area (Rich) | String |
| Text (Encrypted) | String |
| URL | String |

Lookup activity properties

To learn details about the properties, check Lookup activity.

Next steps

For a list of data stores supported as sources and sinks by the copy activity in Data Factory, see Supported data stores.

How to load data into Salesforce objects?

I have created my Salesforce application and need to enter data into it. If an object has only five records, I can simply go to its tab and create them by hand. But if an object needs thousands of records, entering them manually is slow and difficult, and nobody would realistically do it. This section describes how to load data into Salesforce by using automated tools.

Salesforce provides two ways to load data into sObjects: the import wizard, available from the Setup menu, and Data Loader.

Import wizard:

The import wizard requires no installation; we can use it directly from the Setup menu. With the import wizard we can load data for Accounts, Contacts, Leads, Solutions, and custom objects.

- Using the import wizard, we can load up to 50,000 records.

- The import wizard does not load duplicate records.

- We can also schedule exports from the Setup menu, under Data Management, Export Data.

Data Loader

Data Loader is a tool provided by Salesforce, which we can download from the Setup menu. With Data Loader we can perform Insert, Update, Upsert, Delete, Hard Delete, Export, and Export All operations.

- We can load up to 5,000,000 records at a time by using Data Loader.

- By using the command-line interface, we can also schedule data loads.

- By using Data Loader, we can load data for all sObjects.

Working with Import wizard to load data:

To navigate to the import wizard in Salesforce, go to Setup -> Administer -> Data Management.

Here I will explain how to use the import wizard and other options to load data into Salesforce objects:

We can perform the operations below from the standard Setup menu. To understand them, see the topics below.

Working with Data Loader to load data into sObjects

We can use Data Loader to load up to 5,000,000 records, for any sObject. To learn more about Data Loader, see the topic below.

There are many other topics related to this. I will update this page later.